▲Image source: dataconomy

There is nothing worse than a team member calling into a Zoom conference from a busy cafe. New AI-powered wireless headphones called ClearBuds offer a potential answer: they eliminate background noise and ensure that their microphones pick up only the caller’s voice.

Many devices already use speech-enhancement technology, including wireless headphones such as Apple’s AirPods Pro and teleconferencing services such as Zoom and Google Meet. Whether they rely on conventional signal-processing algorithms or more recent machine learning techniques, the goal is the same: remove unwanted noise and distortion from incoming audio and make the speaker’s voice clearer.

“CLEARBUDS WERE BORN OUT OF NECESSITY”

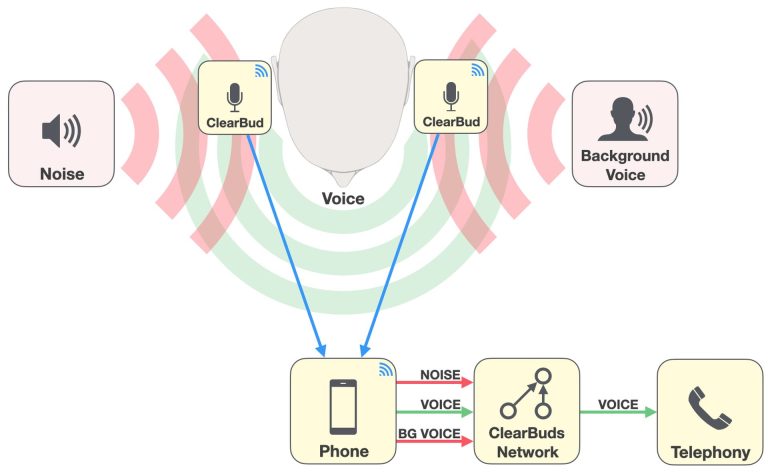

Speech-enhancement systems can exploit acoustic information that distinguishes between types of noise, such as voices or traffic sounds, or spatial cues that help separate audio sources. Doing both at once, on a computational budget small enough to run on consumer-grade devices, is a considerable challenge, and most real-world systems still leave plenty of room for improvement.

A team from the University of Washington has developed a device called ClearBuds that eliminates background noise using a brilliant combination of custom-made in-ear wireless headphones, a unique Bluetooth protocol, and a lightweight deep-learning model that can run on a smartphone.

Ishan Chatterjee, a doctoral student and one of the joint authors of the paper describing the system, presented at the ACM International Conference on Mobile Systems, Applications, and Services, says:

“For us, ClearBuds was born out of necessity. Like many others, when the pandemic lockdown started, we found ourselves taking many calls within our house within these close quarters. There were many noises around the house, kitchen noises, construction noises, conversations.”

Chatterjee not only attends the same classes as his two fellow co-authors, Ph.D. candidates Vivek Jayaram and Maruchi Kim, but also lives with them.

So they decided to pool their experience in hardware, networking, and machine learning to solve the problem.

▲ClearBuds operate by utilizing acoustic information that can discriminate between various noise types (Source: dataconomy)

According to Jayaram, one of the main challenges in speech enhancement is distinguishing between multiple voices. More recent machine learning techniques have become better at telling sounds apart and using that ability to cancel background noise, but they still struggle when two people are speaking at the same time.

The simplest way to tackle this is to triangulate the sources of different sounds using multiple microphones spaced some distance apart. This makes it possible to tell two speakers apart by their location rather than by how they sound. For this to work, though, the microphones must be far enough apart that a sound reaches each of them at a measurably different time.
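
The following is a minimal sketch of the idea. It uses the textbook time-difference-of-arrival (TDOA) approach, not anything specific to ClearBuds. The sketch finds the lag that maximizes the cross-correlation between two microphone signals and then converts that lag to an arrival angle. It assumes a distant (far-field) source and a speed of sound of 343 m/s. The 48 kHz sample rate, the 0.18 m microphone spacing, and the function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C
SAMPLE_RATE = 48_000    # Hz; hypothetical, not taken from the ClearBuds paper
MIC_SPACING = 0.18      # m; hypothetical distance between the two microphones


def estimate_lag(left, right, max_lag):
    """Return the lag (in samples) by which `right` trails `left`,
    chosen as the lag that maximizes their cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    n = len(left)
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += left[i] * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def lag_to_angle(lag):
    """Convert a sample lag to a far-field arrival angle in degrees
    (0 = straight ahead, positive = toward the left microphone)."""
    delay = lag / SAMPLE_RATE
    ratio = delay * SPEED_OF_SOUND / MIC_SPACING
    ratio = max(-1.0, min(1.0, ratio))  # clamp lags beyond the physical limit
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    import random

    rng = random.Random(0)
    left = [rng.uniform(-1, 1) for _ in range(1000)]
    true_lag = 10
    # The right microphone hears the same sound 10 samples later.
    right = [0.0] * true_lag + left[:-true_lag]
    max_lag = int(MIC_SPACING / SPEED_OF_SOUND * SAMPLE_RATE) + 1
    lag = estimate_lag(left, right, max_lag)
    print(lag, round(lag_to_angle(lag), 1))  # expect a lag of 10 (about 23 degrees)
```

Wider spacing helps because the largest possible lag, `MIC_SPACING / SPEED_OF_SOUND * SAMPLE_RATE`, grows with the distance between the microphones. At 0.18 m it is about 25 samples, but for microphones 2 cm apart it drops below three samples.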

Most consumer earbuds have a microphone in each bud, and the two buds sit far enough apart for accurate triangulation. According to Kim, however, streaming and synchronizing audio from both earbuds is beyond what current Bluetooth standards support. For this reason, Apple’s AirPods and high-end hearing aids pack several microphones into each earbud. This allows them to perform a limited form of triangulation before streaming audio from a single earbud to the connected smartphone.

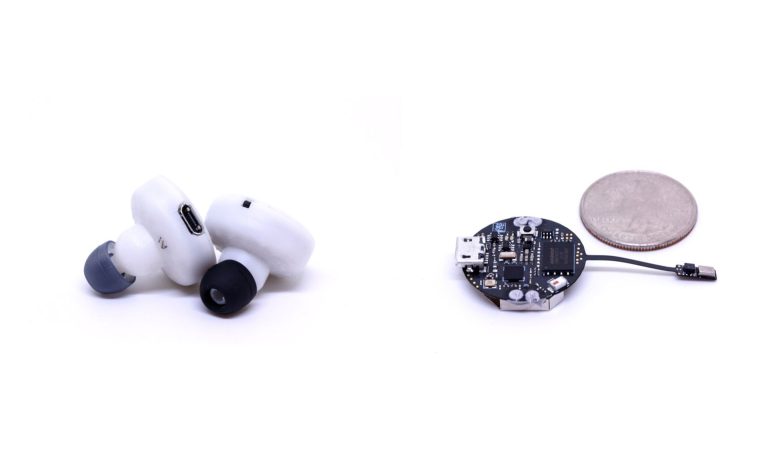

To get around this, the researchers created a custom wireless protocol in which one earbud broadcasts a time-sync beacon. The second earbud uses this signal to align its internal clock with the first, which keeps the two audio streams in step. To implement the protocol, the team built custom-designed earbuds with 3D-printed enclosures. Synchronizing the feeds from each earbud, however, solved only part of the problem.
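
The article does not describe the protocol’s internals. The following sketch shows only the general beacon-based clock-sync idea under stated assumptions. Each beacon carries the primary earbud’s timestamp, and the secondary earbud records its own clock on arrival. From consecutive beacons, the secondary estimates both the offset and the drift between the two clocks, then maps its local audio timestamps onto the primary’s timebase. The sketch assumes that beacon air-time is negligible and that drift is linear between beacons. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Beacon:
    primary_us: int  # timestamp the primary earbud wrote into the beacon (µs)
    local_us: int    # secondary earbud's own clock when the beacon arrived (µs)


class ClockSync:
    """Map the secondary earbud's local clock onto the primary's timebase.

    Hypothetical sketch: assumes beacon air-time is negligible (or already
    subtracted) and that clock drift is linear between beacons."""

    def __init__(self, first: Beacon):
        self.ref = first
        self.rate = 1.0  # primary ticks per local tick; 1.0 until drift is measured

    def update(self, beacon: Beacon) -> None:
        # Re-estimate drift against the previous reference beacon, then re-anchor.
        elapsed_local = beacon.local_us - self.ref.local_us
        if elapsed_local > 0:
            self.rate = (beacon.primary_us - self.ref.primary_us) / elapsed_local
        self.ref = beacon

    def to_primary(self, local_us: int) -> float:
        # Offset from the latest beacon plus drift-corrected elapsed time.
        return self.ref.primary_us + (local_us - self.ref.local_us) * self.rate


if __name__ == "__main__":
    # Secondary clock starts 5 ms behind the primary and runs 100 ppm slow.
    sync = ClockSync(Beacon(primary_us=1_000_000, local_us=995_000))
    sync.update(Beacon(primary_us=2_000_000, local_us=1_994_900))
    # An audio frame captured at local time 2_494_850 µs maps to about
    # 2_500_000 µs on the primary's clock.
    print(round(sync.to_primary(2_494_850)))
```

In a design like this, the secondary earbud would stamp each audio frame with its converted time. The phone could then line up samples from both earbuds before comparing arrival times.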

▲When designing ClearBuds, the team 3D printed the enclosures for the custom-designed earphones (Source: dataconomy)

The researchers also needed to run their speech-enhancement software on the smartphone paired with the earbuds, processing the audio with state-of-the-art deep-learning techniques. These models are computationally expensive, so most commercial AI speech-enhancement applications send audio to cloud servers for processing. Even the latest smartphones have only a fraction of the computing power of the GPUs typically used to run deep learning, Jayaram says.

The team therefore started from an existing neural network that can be trained to detect timing differences between two incoming signals, and used it to locate the source. To make the network run on a smartphone, they stripped it down to its essentials by reducing the number of parameters and layers. Paring the network back this way caused a substantial drop in audio quality and introduced crackles, static, and pops. The researchers therefore fed its output into a second network trained to filter out these kinds of distortions.
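
The two ClearBuds networks are learned models whose details are not given here. The following sketch illustrates only the cascade structure, in which a cheap first stage does the separation and a second stage cleans up the artifacts it leaves behind. Both stages are deliberately simple, non-neural stand-ins: a hard noise gate and a 3-point median filter. All names are hypothetical.

```python
from typing import Callable, List, Sequence

Signal = List[float]
Stage = Callable[[Sequence[float]], Signal]


def cascade(*stages: Stage) -> Stage:
    """Chain stages so each one processes the previous stage's output."""
    def run(signal: Sequence[float]) -> Signal:
        out: Signal = list(signal)
        for stage in stages:
            out = stage(out)
        return out
    return run


def crude_separator(signal: Sequence[float], threshold: float = 0.1) -> Signal:
    """Stand-in for the stripped-down first network: a hard noise gate.
    Cheap, but any noise burst above the threshold survives as an
    isolated click, much like the crackles a pared-back model leaves."""
    return [x if abs(x) >= threshold else 0.0 for x in signal]


def artifact_cleaner(signal: Sequence[float]) -> Signal:
    """Stand-in for the second network: a 3-point median filter that
    removes isolated single-sample spikes while keeping sustained sound."""
    n = len(signal)
    cleaned: Signal = []
    for i in range(n):
        # Repeat the edge samples so every window has three values.
        window = [signal[max(i - 1, 0)], signal[i], signal[min(i + 1, n - 1)]]
        cleaned.append(sorted(window)[1])
    return cleaned


enhance = cascade(crude_separator, artifact_cleaner)

if __name__ == "__main__":
    # Low-level hiss, one loud noise spike at index 2, then sustained "speech".
    noisy = [0.02, -0.03, 0.6, 0.01, -0.02, 0.5, 0.5, 0.5, 0.5]
    print(crude_separator(noisy))  # the gate lets the spike at index 2 through
    print(enhance(noisy))          # the cleaner removes it
```

The design point mirrors the one Jayaram describes. Neither stage is expensive on its own, and the second stage is there specifically to repair what the cheaper first stage gets wrong.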

“The innovation was combining two different types of neural networks, each of which could be very lightweight, and in conjunction, they could approach the performance of these really big neural networks that couldn’t run on an iPhone,” explains Jayaram.

▲Participants rated audio recordings from noisy real-world settings like crowded crossroads or loud diners (Source: dataconomy)

ClearBuds consistently outperformed the Apple AirPods Pro on signal-to-distortion ratio. In addition, 37 participants rated audio recordings taken in noisy real-world settings such as crowded crossroads and loud diners. They judged the recordings processed by ClearBuds’ neural network to have the best overall noise cancellation. In practical tests, eight participants strongly preferred ClearBuds to the equipment they normally use for calls.
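
Signal-to-distortion ratio compares a clean reference signal with a processed estimate of it. In its simplest form, SDR = 10·log10(‖s‖² / ‖s − ŝ‖²), expressed in decibels, where higher is better. The ClearBuds evaluation may use a variant such as scale-invariant SDR or the BSS Eval definition. The following sketch therefore illustrates the idea rather than the paper’s exact metric. The function name is hypothetical.

```python
import math
from typing import Sequence


def sdr_db(reference: Sequence[float], estimate: Sequence[float]) -> float:
    """Simple signal-to-distortion ratio in decibels:
    10 * log10(energy of reference / energy of (reference - estimate))."""
    if len(reference) != len(estimate):
        raise ValueError("signals must have the same length")
    signal_energy = sum(s * s for s in reference)
    error_energy = sum((s - e) ** 2 for s, e in zip(reference, estimate))
    if error_energy == 0.0:
        return math.inf   # perfect reconstruction
    if signal_energy == 0.0:
        return -math.inf  # silent reference, nonzero output: all distortion
    return 10.0 * math.log10(signal_energy / error_energy)


if __name__ == "__main__":
    clean = [1.0, -1.0, 1.0, -1.0]
    processed = [0.9, -0.9, 0.9, -0.9]  # 10% amplitude error on every sample
    print(round(sdr_db(clean, processed), 2))  # about 20 dB
```

Because the scale is logarithmic, each 10 dB gain in SDR corresponds to a tenfold drop in distortion energy relative to the clean signal.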

Tillman Weyde, a reader in machine learning at the City University of London, says the output does contain some distortions, but that overall the algorithm is very good at eliminating background noise and voices. He continues, “This is a great result from a student and academic team that has obviously put a tremendous amount of work into this project to make effective progress on a problem that affects hundreds of millions of people using wireless earbuds.”

▲“The algorithm is very good at eliminating background noise and voices” (Source: dataconomy)

Alexandre Défossez, a research scientist at Facebook AI Research Paris, calls the work really good but points to one constraint: it takes 109 milliseconds to transmit the audio to the smartphone and then process it.

“We always get 50 to 100 milliseconds of latency from the network. Adding an extra 100 milliseconds is a big price to pay. As the communication stack becomes smarter and smarter, we will end up with fairly noticeable and annoying delays in all our communications,” he explains.
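
Défossez’s concern can be made concrete with simple arithmetic. The following sketch assumes that the two delays simply add, which the article does not state explicitly. The constant and function names are hypothetical.

```python
# Figures quoted in the article; adding them assumes the delays simply stack.
CLEARBUDS_MS = 109            # earbud-to-phone transmission plus on-phone processing
NETWORK_MS_RANGE = (50, 100)  # typical network latency cited by Défossez


def total_delay_ms(extra_ms: int, network_ms: int) -> int:
    """Total delay once ClearBuds' processing is added to network latency."""
    return extra_ms + network_ms


low, high = (total_delay_ms(CLEARBUDS_MS, n) for n in NETWORK_MS_RANGE)
print(f"{low} to {high} ms")  # 159 to 209 ms
```

At either end of the range, the total is more than double the network latency alone.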

Reposted from: dataconomy.com
