Summary: Last year, San Francisco-based research lab OpenAI released Codex, an AI model for translating natural language commands into app code. The model, which powers GitHub’s Copilot feature, was heralded at the time as one of the most powerful examples of machine programming, the category of tools that automates the development and maintenance of software. Not to be outdone, DeepMind, the AI lab backed by Google parent company Alphabet, claims to have improved upon Codex in key areas with AlphaCode, a system that can write “competition-level” code.

In programming competitions hosted on Codeforces, a platform for programming contests, DeepMind claims that AlphaCode achieved an average ranking within the top 54.3% across 10 recent contests with more than 5,000 participants each.

DeepMind principal research scientist Oriol Vinyals says it’s the first time that a computer system has achieved such a competitive level in programming competitions. “AlphaCode [can] read the natural language descriptions of an algorithmic problem and produce code that not only compiles, but is correct,” he added in a statement. “[It] indicates that there is still work to do to achieve the level of the highest performers, and advance the problem-solving capabilities of our AI systems. We hope this benchmark will lead to further innovations in problem-solving and code generation.”

LEARNING TO CODE WITH AI

Machine programming has been supercharged by AI over the past several months. During its Build developer conference in May 2021, Microsoft detailed a new feature in Power Apps that taps OpenAI’s GPT-3 language model to assist people in choosing formulas. Intel’s ControlFlag can autonomously detect errors in code. And Facebook’s TransCoder converts code from one programming language into another.

The applications are vast in scope — explaining why there’s a rush to create such systems. According to a study from the University of Cambridge, at least half of developers’ efforts are spent debugging, which costs the software industry an estimated $312 billion per year. AI-powered code suggestion and review tools promise to cut development costs while allowing coders to focus on creative, less repetitive tasks — assuming the systems work as advertised.

Like Codex, AlphaCode — the largest version of which contains 41.4 billion parameters, roughly quadruple the size of Codex — was trained on a snapshot of public repositories on GitHub in the programming languages C++, C#, Go, Java, JavaScript, Lua, PHP, Python, Ruby, Rust, Scala, and TypeScript. AlphaCode’s training dataset was 715.1GB — about the same size as Codex’s, which OpenAI estimated to be “over 600GB.”

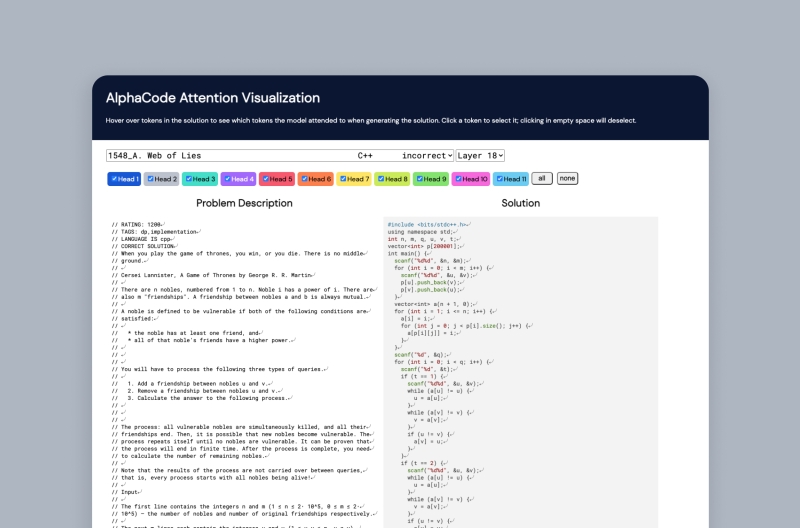

▲An example of the interface that AlphaCode used to answer programming challenges.

In machine learning, parameters are the part of the model that’s learned from historical training data. Generally speaking, the correlation between the number of parameters and sophistication has held up remarkably well.

Architecturally, AlphaCode is what’s known as a Transformer-based language model, similar to Salesforce’s code-generating CodeT5. The Transformer architecture is made up of two core components: an encoder and a decoder. The encoder contains layers that process input data, like text and images, iteratively layer by layer. Each encoder layer generates encodings with information about which parts of the inputs are relevant to each other. Each layer then passes its encodings to the next, until the final encoder layer is reached.
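
To make the encoder description concrete, here is a minimal Python sketch of stacked self-attention layers. It is an illustration under simplifying assumptions, not AlphaCode’s implementation: the function names (`softmax`, `self_attention`, `encode`) are hypothetical, and the learned query/key/value projections, multiple attention heads, feed-forward sublayers, residual connections, and layer normalization found in real Transformers are all omitted.

```python
import math

def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """One simplified encoder layer.

    tokens: list of equal-length float vectors, one per input position.
    Returns (outputs, weights); weights[i][j] says how relevant position j
    is to position i.
    """
    d = len(tokens[0])
    outputs, weights = [], []
    for query in tokens:
        # Scaled dot product between this position and every position.
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d)
                  for key in tokens]
        w = softmax(scores)
        weights.append(w)
        # New encoding: relevance-weighted average of all positions.
        outputs.append([sum(w[j] * tokens[j][i] for j in range(len(tokens)))
                        for i in range(d)])
    return outputs, weights

def encode(tokens, num_layers=2):
    """Pass encodings from one layer to the next, as the text describes."""
    for _ in range(num_layers):
        tokens, _ = self_attention(tokens)
    return tokens
```

Each row of `weights` plays the role of the “which parts of the inputs are relevant to each other” information, and `encode` simply feeds one layer’s output into the next.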

CREATING A NEW BENCHMARK

Transformers typically undergo semi-supervised learning that involves unsupervised pretraining, followed by supervised fine-tuning. Residing between supervised and unsupervised learning, semi-supervised learning accepts data that’s partially labeled or where the majority of the data lacks labels. In this case, Transformers are first subjected to “unknown” data for which no previously defined labels exist. During the fine-tuning process, Transformers train on labeled datasets so they learn to accomplish particular tasks like answering questions, analyzing sentiment, and paraphrasing documents.
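
The difference between the two phases can be sketched in a few lines of Python. This is a hedged illustration rather than DeepMind’s training pipeline: it assumes a next-token prediction objective for pretraining (a common choice for language models, not one the article specifies), and the helper `next_token_pairs` and the sample data are hypothetical.

```python
def next_token_pairs(tokens, context_size=3):
    """Turn unlabeled tokens into (context, target) training pairs.

    No human labels are needed: the "label" for each context is simply
    the token that follows it in the raw text.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

# Pretraining data: raw code with no labels attached.
unlabeled = "def add ( a , b ) : return a + b".split()
pretraining_pairs = next_token_pairs(unlabeled)

# Fine-tuning data: each input comes with a provided target
# (a made-up problem statement and solution, for illustration only).
fine_tuning_examples = [
    ("Print the sum of two integers.",
     "print(sum(map(int, input().split())))"),
]
```

In the pretraining pairs the targets fall out of the text itself, while every fine-tuning example has to be paired with a known-good answer for the specific task.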

In AlphaCode’s case, DeepMind fine-tuned and tested the system on CodeContests, a new dataset the lab created that includes problems, solutions, and test cases scraped from Codeforces with public programming datasets mixed in. DeepMind also tested the best-performing version of AlphaCode — an ensemble of the 41-billion-parameter model and a 9-billion-parameter model — on actual programming tests on Codeforces, running AlphaCode live to generate solutions for each problem.

On CodeContests, given up to a million samples per problem, AlphaCode solved 34.2% of problems. And on Codeforces, DeepMind claims it was within the top 28% of users who’ve participated in a contest within the last six months in terms of overall performance.
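
A solve rate “given up to a million samples per problem” can be read as: generate many candidate programs, and count a problem as solved if any candidate passes its test cases. The Python sketch below works under that assumption. It is not DeepMind’s evaluation code, the article does not describe how candidates were selected, and the names `passes_all` and `solve_rate` are hypothetical.

```python
def passes_all(program, tests):
    """True if program(given) == expected for every test case.

    A candidate that raises an exception is treated as a failure.
    """
    for given, expected in tests:
        try:
            if program(given) != expected:
                return False
        except Exception:
            return False
    return True

def solve_rate(problems, max_samples):
    """Fraction of problems solved within a sample budget.

    problems: list of (candidates, tests) pairs, where candidates is a list
    of callables standing in for generated programs.
    """
    solved = 0
    for candidates, tests in problems:
        if any(passes_all(c, tests) for c in candidates[:max_samples]):
            solved += 1
    return solved / len(problems)

# Two toy problems: "double the input" and "square the input".
problems = [
    ([lambda x: x + 1, lambda x: x * 2], [(1, 2), (3, 6)]),   # 2nd candidate is correct
    ([lambda x: x + 2, lambda x: 1 // 0], [(2, 4), (3, 9)]),  # no candidate is correct
]
rate_with_two_samples = solve_rate(problems, max_samples=2)
rate_with_one_sample = solve_rate(problems, max_samples=1)
```

The toy data shows why the sample budget matters: with two samples per problem the first problem is solved, while with only one sample neither is.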

“The latest DeepMind paper is once again an impressive feat of engineering that shows that there are still impressive gains to be had from our current Transformer-based models with ‘just’ the right sampling and training tweaks and no fundamental changes in model architecture,” Connor Leahy, a member of the open AI research effort EleutherAI, told VentureBeat via email. “DeepMind brings out the full toolbox of tweaks and best practices by using clean data, large models, a whole suite of clever training tricks, and, of course, lots of compute. DeepMind has pushed the performance of these models far faster than even I would have expected. The 50th percentile competitive programming result is a huge leap, and their analysis shows clearly that this is not ‘just memorization.’ The progress in coding models from GPT3 to codex to AlphaCode has truly been staggeringly fast.”

LIMITATIONS OF DEEPMIND CODE GENERATION

Machine programming is by no stretch a solved science, and DeepMind admits that AlphaCode has limitations. For example, the system doesn’t always produce code that’s syntactically correct for each language, particularly in C++. AlphaCode also performs worse at generating challenging code, such as that required for dynamic programming, a technique for solving complex mathematical problems.
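
For readers unfamiliar with the term, dynamic programming solves a problem by building up answers to smaller subproblems and reusing them. The Python example below is a textbook illustration (the classic minimum-coin problem), not a problem taken from CodeContests or Codeforces, and the function name `min_coins` is chosen for illustration.

```python
def min_coins(coins, amount):
    """Fewest coins needed to make `amount`, or None if it cannot be made."""
    INF = float("inf")
    # best[t] = fewest coins that sum to t; best[0] = 0 by definition.
    best = [0] + [INF] * amount
    for total in range(1, amount + 1):
        for coin in coins:
            # Reuse the already-computed answer for the smaller amount.
            if coin <= total and best[total - coin] + 1 < best[total]:
                best[total] = best[total - coin] + 1
    return None if best[amount] == INF else best[amount]
```

For instance, `min_coins([1, 3, 4], 6)` returns 2 (two coins of 3), whereas always grabbing the largest coin first would use three coins (4 + 1 + 1). Spotting that a problem needs this kind of table, rather than a simpler rule, is part of what makes such tasks hard.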

AlphaCode might be problematic in other ways, as well. While DeepMind didn’t probe the model for bias, code-generating models including Codex have been shown to amplify toxic and flawed content in training datasets. For example, Codex can be prompted to write “terrorist” when fed the word “Islam,” and generate code that appears to be superficially correct but poses a security risk by invoking compromised software and using insecure configurations.

Systems like AlphaCode, which, it should be noted, are expensive to produce and maintain, could also be misused, as recent studies have explored. Researchers at Booz Allen Hamilton and EleutherAI trained a language model called GPT-J to generate code that could solve introductory computer science exercises, successfully bypassing a widely used plagiarism detection tool for programming assignments. At the University of Maryland, researchers discovered that it’s possible for current language models to generate false cybersecurity reports that are convincing enough to fool leading experts.

It’s an open question whether malicious actors will use these types of systems in the future to automate malware creation at scale. For that reason, Mike Cook, an AI researcher at Queen Mary University of London, disputes the idea that AlphaCode brings the industry closer to “a problem-solving AI.”

“I think this result isn’t too surprising given that text comprehension and code generation are two of the four big tasks AI have been showing improvements at in recent years … One challenge with this domain is that outputs tend to be fairly sensitive to failure. A wrong word or pixel or musical note in an AI-generated story, artwork, or melody might not ruin the whole thing for us, but a single missed test case in a program can bring down space shuttles and destroy economies,” Cook told VentureBeat via email. “So although the idea of giving the power of programming to people who can’t program is exciting, we’ve got a lot of problems to solve before we get there.”

If DeepMind can solve these problems — and that’s a big if — it stands to make a cozy profit in a constantly-growing market. Of the practical domains the lab has recently tackled with AI, like weather forecasting, materials modeling, atomic energy computation, app recommendations, and datacenter cooling optimization, programming is among the most lucrative. Even migrating an existing codebase to a more efficient language like Java or C++ commands a princely sum. For example, the Commonwealth Bank of Australia spent around $750 million over the course of five years to convert its platform from COBOL to Java.

“I can safely say the results of AlphaCode exceeded my expectations. I was skeptical because even in simple competitive problems it is often required not only to implement the algorithm, but also (and this is the most difficult part) to invent it,” Codeforces founder Mike Mirzayanov said in a statement. “AlphaCode managed to perform at the level of a promising new competitor. I can’t wait to see what lies ahead.”

Source: dataconomy.com
