Summary: How deep learning can represent War and Peace as a vector

Applications of neural networks have expanded significantly in recent years, from image segmentation to natural language processing to time-series forecasting. One notably successful use of deep learning is embedding, a method for representing discrete variables as continuous vectors. The technique has found practical applications in word embeddings for machine translation and in entity embeddings for categorical variables.
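To make "discrete variables as continuous vectors" concrete, here is a minimal sketch of an embedding as a lookup table. The book titles, the dimension of 4, and the random values are all assumptions chosen for illustration; in a real model the table entries would be learned during training rather than drawn at random.

```python
import random

random.seed(0)  # fixed seed so the illustrative values are reproducible

# Hypothetical discrete variable: a small set of book titles.
categories = ["War and Peace", "Anna Karenina", "Cosmos"]
embedding_dim = 4  # assumed size; real embeddings are often 50 or more dimensions

# Map each category to an integer index.
index_of = {name: i for i, name in enumerate(categories)}

# The embedding table holds one continuous vector per category.
# Random values stand in for weights a network would learn.
embedding_table = [
    [random.uniform(-1.0, 1.0) for _ in range(embedding_dim)]
    for _ in categories
]

def embed(name):
    """Look up the continuous vector for a discrete category."""
    return embedding_table[index_of[name]]

def one_hot(name):
    """Encode a category as a one-hot vector, for comparison."""
    return [1.0 if i == index_of[name] else 0.0 for i in range(len(categories))]

def one_hot_times_table(name):
    """Multiply the one-hot vector by the table; this selects a single row."""
    hot = one_hot(name)
    return [
        sum(hot[i] * embedding_table[i][j] for i in range(len(categories)))
        for j in range(embedding_dim)
    ]

vector = embed("War and Peace")
```

The lookup in `embed` and the product in `one_hot_times_table` give the same vector, which is why an embedding layer can be thought of as a one-hot encoding followed by a weight matrix, implemented more cheaply as an index operation.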

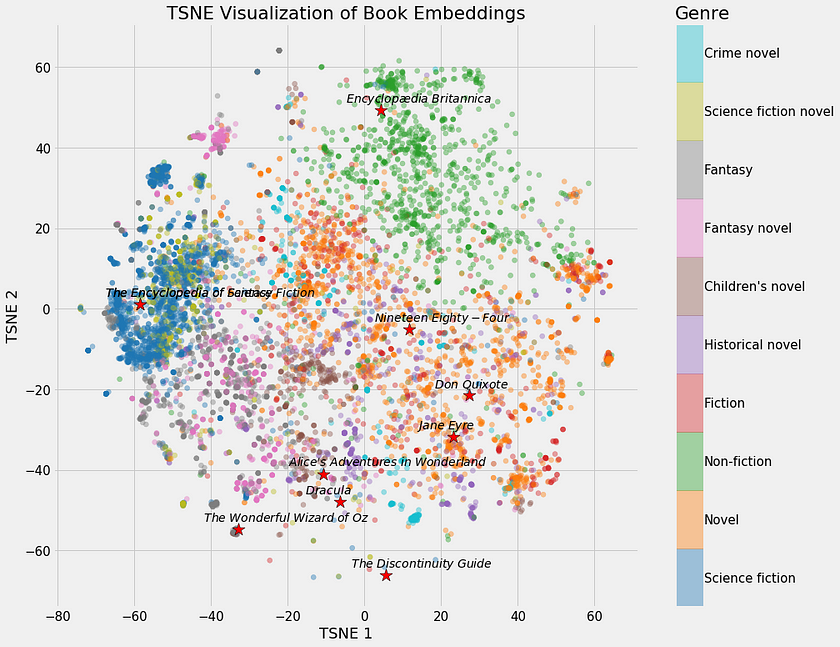

In this article, I’ll explain what neural network embeddings are, why we want to use them, and how they are learned. We’ll go through these concepts in the context of a real problem I’m working on: representing all the books on Wikipedia as vectors to create a book recommendation system.

...

Neural network embeddings have 3 primary purposes:

1. Finding nearest neighbors in the embedding space. These can be used to make recommendations based on user interests or cluster categories.
2. As input to a machine learning model for a supervised task.
3. For visualization of concepts and relations between categories.
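The first purpose, nearest-neighbor search, can be sketched in a few lines. The example below assumes cosine similarity as the distance measure, which is a common choice but not one the text above specifies. The 3-dimensional vectors and the function names `cosine_similarity` and `nearest_neighbors` are hypothetical and hand-picked for illustration; they are not learned embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest_neighbors(query, embeddings, k=2):
    """Return the k (title, similarity) pairs closest to `query`, excluding itself."""
    query_vec = embeddings[query]
    scored = [
        (title, cosine_similarity(query_vec, vec))
        for title, vec in embeddings.items()
        if title != query
    ]
    # Highest similarity first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Hypothetical embeddings, made up for this example only.
book_embeddings = {
    "War and Peace": [0.9, 0.1, 0.3],
    "Anna Karenina": [0.8, 0.2, 0.4],
    "A Brief History of Time": [0.1, 0.9, 0.2],
    "Cosmos": [0.2, 0.8, 0.1],
}

neighbors = nearest_neighbors("War and Peace", book_embeddings, k=2)
for title, score in neighbors:
    print(f"{title}: {score:.3f}")
```

With these made-up vectors, "Anna Karenina" ranks as the closest neighbor of "War and Peace" because their vectors point in nearly the same direction. A recommendation system built on learned embeddings would apply the same ranking step over many more items.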

...

Full Text: towarddatascience
