Summary: For the first time, a team of researchers from Columbia Engineering has developed a robot that can learn a model of its entire body from scratch, without any human assistance.

▲Image source: dataconomy

In a recent paper published in Science Robotics, the researchers detail how their robot built a kinematic model of itself and used that model to plan actions, reach goals, and avoid obstacles in a range of situations.

As any athlete or fashion-conscious person knows, our perception of our bodies is not always accurate or realistic, yet it plays an important role in how we behave in society. While you play ball or get dressed, your brain is continuously planning your movements so that you can move your body without bumping, tripping, or falling.

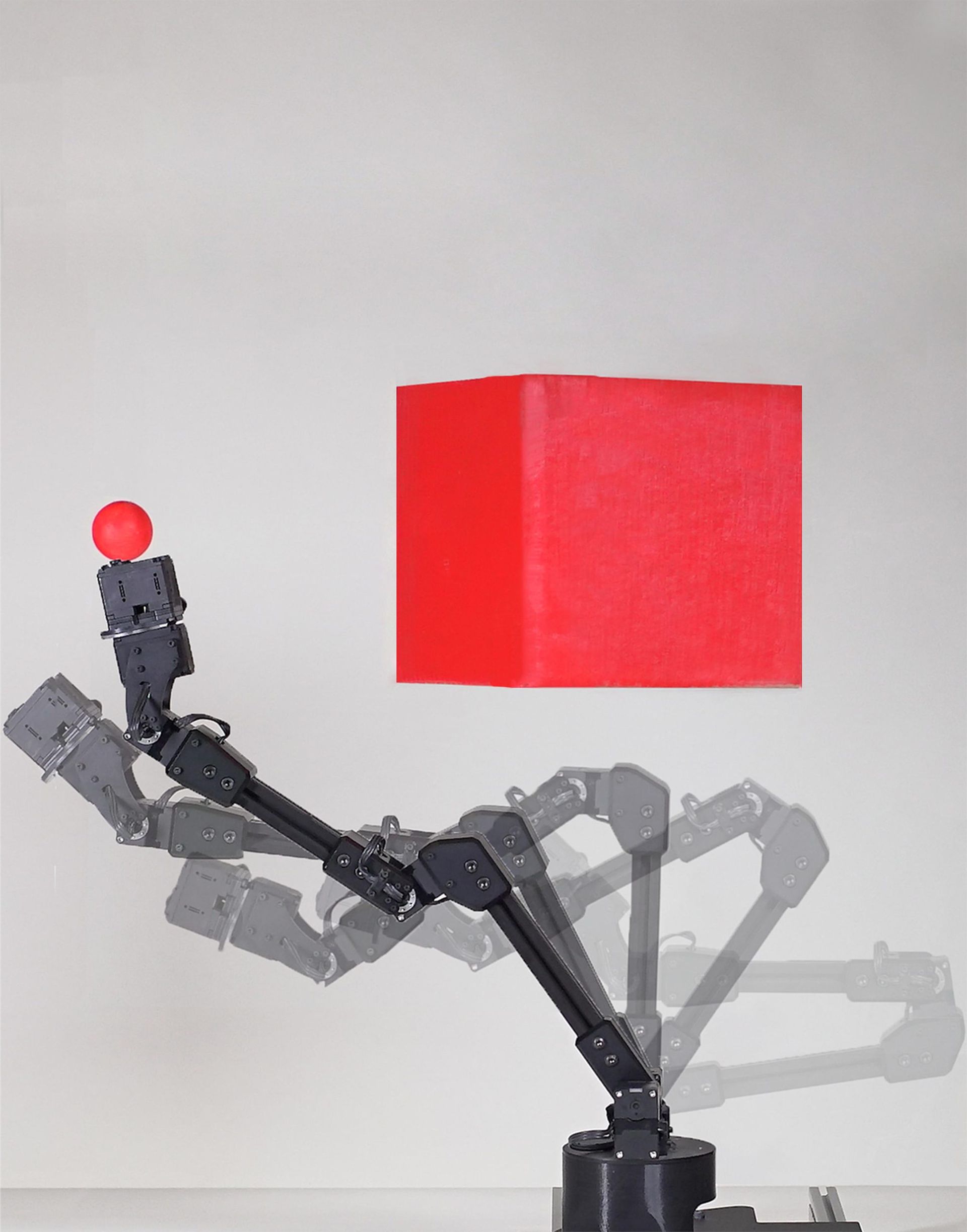

▲Researchers positioned a robotic arm in front of five streaming video cameras (Source: dataconomy)

Beyond planning motions, achieving goals, and avoiding obstacles with its self-model, the robot could even detect damage to its body automatically and compensate for it.

THE ROBOT WATCHES AND LEARNS ITS OWN BODY

The researchers positioned a robotic arm in front of five streaming video cameras and let the robot watch itself through them as it moved freely. Like an infant exploring itself for the first time in a hall of mirrors, the robot wiggled and contorted to learn exactly how its body moved in response to various motor commands.

After roughly three hours, the robot stopped. Its internal deep neural network had finished learning the relationship between the robot's motor commands and the volume its body occupied in its surroundings.
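To make the idea of a learned self-model more concrete, here is a minimal Python sketch under loudly stated assumptions. A hypothetical two-link planar arm stands in for the real robot, a forward-kinematics function stands in for the five cameras, and a nearest-neighbour lookup over recorded poses replaces the deep neural network used in the study. Like the robot's self-model, it answers one question: at a given set of joint angles, does the body occupy a given point in space? All names (`LINK_LENGTHS`, `BODY_RADIUS`, `body_points`, `babble`, `angle_gap`, `occupies`) are illustrative and not taken from the paper.

```python
import math
import random

# Hypothetical toy robot: a two-link planar arm (the study used a physical arm in 3D).
LINK_LENGTHS = (1.0, 1.0)  # assumed link lengths
BODY_RADIUS = 0.1          # assumed half-thickness of each link


def body_points(angles, samples_per_link=10):
    """Stand-in for the cameras: points along the arm for the given joint angles."""
    x, y, heading = 0.0, 0.0, 0.0
    points = []
    for length, angle in zip(LINK_LENGTHS, angles):
        heading += angle  # each joint angle is relative to the previous link
        for i in range(1, samples_per_link + 1):
            t = length * i / samples_per_link
            points.append((x + t * math.cos(heading), y + t * math.sin(heading)))
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return points


def babble(n_poses, seed=0):
    """'Motor babbling': try random joint angles and record what the cameras see."""
    rng = random.Random(seed)
    memory = []
    for _ in range(n_poses):
        angles = (rng.uniform(-math.pi, math.pi), rng.uniform(-math.pi, math.pi))
        memory.append((angles, body_points(angles)))
    return memory


def angle_gap(a, b):
    """Sum of wrapped joint-angle differences, so -pi and pi count as close."""
    return sum(abs(math.atan2(math.sin(p - q), math.cos(p - q))) for p, q in zip(a, b))


def occupies(memory, angles, point):
    """Self-model query: would the body cover `point` at these joint angles?"""
    # Recall the remembered pose closest to the requested joint angles.
    _, observed = min(memory, key=lambda entry: angle_gap(entry[0], angles))
    return any(math.dist(point, p) <= BODY_RADIUS for p in observed)


if __name__ == "__main__":
    memory = babble(5000)
    pose, seen = memory[0]
    print(occupies(memory, pose, seen[5]))     # True: a point the "cameras" recorded
    print(occupies(memory, pose, (5.0, 5.0)))  # False: beyond the arm's 2.0 reach
```

The lookup table only recalls the closest pose it has already seen; a trained network such as the one described in the study is meant to generalise smoothly between poses instead, which a sketch this small does not attempt.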

Hod Lipson, professor of mechanical engineering and director of Columbia's Creative Machines Lab, said:

“We were really curious to see how the robot imagined itself. But you can’t just peek into a neural network, it’s a black box.”

After the researchers tried various visualization techniques, the self-image gradually emerged.

“It was a sort of gently flickering cloud that appeared to engulf the robot’s three-dimensional body. As the robot moved, the flickering cloud gently followed it,” stated Lipson.

The robot's self-model was accurate to within about 1% of its workspace.

SELF-MODELING ROBOTS WILL LEAD TO AUTONOMOUS SYSTEMS THAT ARE MORE SELF-SUFFICIENT

There are several reasons robots should be able to model themselves without help from engineers. Doing so not only saves labor but also lets a robot keep track of its own wear and tear and detect and compensate for damage. The authors argue that this ability matters because autonomous systems are increasingly expected to be self-sufficient. For example, a factory robot could notice that something isn't moving properly and then compensate or call for assistance.
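The damage-detection idea can be illustrated with a small Python sketch, again only under assumptions: the robot compares where its self-model predicts its body should be with where the cameras actually see it, and treats a large average mismatch as a sign that something isn't moving properly. The threshold value, the point lists, and the function names (`mean_mismatch`, `check_body`) are hypothetical and not taken from the study.

```python
import math

# Hypothetical tolerance: the average distance (in the same units as the points)
# that is still accepted as normal sensing noise.
DAMAGE_THRESHOLD = 0.05


def mean_mismatch(predicted, observed):
    """Average distance between predicted and observed body points."""
    if not predicted or len(predicted) != len(observed):
        raise ValueError("expected two non-empty point lists of equal length")
    return sum(math.dist(p, o) for p, o in zip(predicted, observed)) / len(predicted)


def check_body(predicted, observed, threshold=DAMAGE_THRESHOLD):
    """Return 'ok' if the body moves as the self-model expects, else ask for help."""
    if mean_mismatch(predicted, observed) <= threshold:
        return "ok"
    return "request assistance"


if __name__ == "__main__":
    # Self-model prediction: an arm stretched straight along the x-axis.
    predicted = [(0.5, 0.0), (1.0, 0.0), (1.5, 0.0), (2.0, 0.0)]
    healthy = [(0.51, 0.0), (1.0, 0.01), (1.5, 0.0), (2.0, 0.0)]
    # Hypothetical damage: the outer link droops instead of staying straight.
    damaged = [(0.5, 0.0), (1.0, 0.0), (1.0, -0.5), (1.0, -1.0)]
    print(check_body(predicted, healthy))  # ok
    print(check_body(predicted, damaged))  # request assistance
```

This sketch only flags the problem; how a robot would then compensate for the damage is not shown here.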

“We humans clearly have a notion of self. Close your eyes and try to imagine how your own body would move if you were to take some action, such as stretch your arms forward or take a step backward,” explained the study’s first author, Boyuan Chen, who led the work and is now an assistant professor at Duke University. “Somewhere inside our brain we have a notion of self, a self-model that informs us what volume of our immediate surroundings we occupy, and how that volume changes as we move,” he added.

▲The robot's self-model was accurate to within about 1% of its workspace (Source: dataconomy)

The project is part of Lipson’s decades-long search for ways to give robots a semblance of self-awareness. “Self-modeling is a primitive form of self-awareness. If a robot, animal, or human, has an accurate self-model, it can function better in the world, it can make better decisions, and it has an evolutionary advantage,” he said.

The researchers are aware of the limitations, risks, and open questions that come with giving robots more autonomy through self-awareness. Lipson acknowledges that the level of self-awareness demonstrated in this study is “trivial compared to that of humans, but you have to start somewhere. We have to go slowly and carefully, so we can reap the benefits while minimizing the risks.”

Reposted from: dataconomy

If you enjoyed this article, please like our Facebook page: Big Data In Finance
