Summary: In addition to the well-known security challenges faced by devops teams, organizations also need to consider a new source of security challenges: machine learning (ML).

▲Image source: leackstat.com

Given the complexity, sensitivity and scale of the typical enterprise’s software stack, security has naturally always been a central concern for most IT teams. But in addition to the well-known security challenges faced by devops teams, organizations also need to consider a new source of security challenges: machine learning (ML).

ML adoption is skyrocketing in every sector, with McKinsey finding that by the end of last year, 56% of businesses had adopted ML in at least one business function. However, in the race to adopt it, many are encountering the distinct security challenges that come with ML, along with challenges in deploying and leveraging ML responsibly. This is especially true in more recent contexts, where machine learning is deployed at scale for use cases that involve critical data and infrastructure.

Security concerns for ML become particularly pressing when the technology is operating in a live enterprise environment, given the scale of disruption a security breach could cause. At the same time, ML needs to integrate into the existing practices of IT teams and avoid becoming a source of bottlenecks and downtime for the enterprise. Add the principles governing the responsible use of AI, and teams are having to change their practices to build robust security into their workloads.

The rise of MLSecOps

To address these concerns, machine learning practitioners are working to adapt the practices they have developed for devops and IT security to the deployment of ML at scale. This is why industry professionals are building a specialization that integrates security, devops and ML: machine learning security operations, or ‘MLSecOps’ for short. As a practice, MLSecOps brings together ML infrastructure, automation between development and operations teams, and security policies.

But what challenges does MLSecOps actually solve? And how?

The rise of MLSecOps has been encouraged by the growing prominence of a broad set of security challenges facing the industry. To give a sense of the scope and the nature of the problems that MLSecOps has emerged in response to, let’s cover two in detail: access to model endpoints and supply chain vulnerabilities.

Model access

Unrestricted access to machine learning models, at any of several levels, poses major security risks. The first and most intuitive level can be defined as “black-box” access: the ability to perform inference on a model, that is, to send it inputs and receive its predictions. Although this access is what lets applications and use cases consume models to produce business value, unrestricted access to a model’s predictions can introduce security risks of its own.

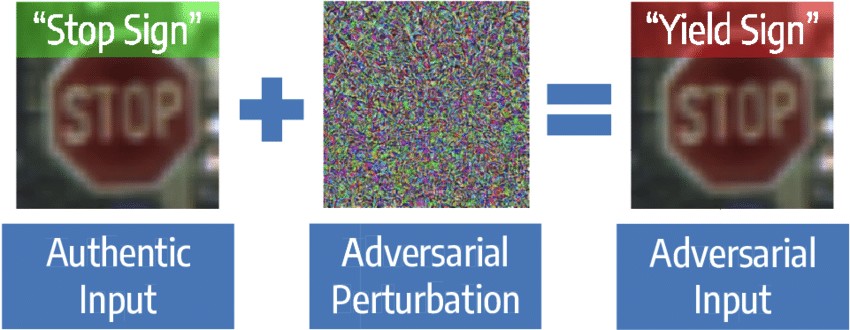

An exposed model can be subject to an “adversarial” attack. In such an attack, the model is reverse-engineered to generate “adversarial examples”: inputs to which small, deliberately crafted perturbations (often described as statistical noise) have been added. The perturbation is designed to make the model misinterpret the input and predict a different class from the one that would intuitively be expected.

A textbook example of an adversarial attack involves a picture of a stop sign. When adversarial noise is added to the picture, it can trick an AI-powered self-driving car into believing it’s a different sign entirely, such as a “yield” sign, whilst still looking like a stop sign to a human.

▲Example of a common adversarial attack on image classifiers. Image by Fabio Carrara, Fabrizio Falchi, Giuseppe Amato (ISTI-CNR), Rudy Becarelli and Roberto Caldelli (CNIT Research Unit at MICC, University of Florence) via ERCIM. (Source: leackstat.com)
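The article does not name a specific attack technique, but the Fast Gradient Sign Method (FGSM) is one well-known way to craft adversarial examples. The sketch below applies it to a toy logistic-regression model rather than an image classifier; the weights, input and function names are made-up assumptions for illustration. It also assumes the attacker already knows the weights, which is what the reverse-engineering step described above would give them.

```python
from __future__ import annotations

import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    """Probability of class 1 under a toy logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    """Return -1, 0 or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w: list[float], b: float, x: list[float], y_true: int, epsilon: float) -> list[float]:
    """Fast Gradient Sign Method: shift every feature by at most epsilon
    in the direction that increases the model's cross-entropy loss."""
    p = predict_proba(w, b, x)
    # For binary cross-entropy on a sigmoid output, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - y_true) * wi for wi in w]
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]


if __name__ == "__main__":
    # Made-up weights and input, for illustration only.
    w, b = [2.0, -3.0, 1.0], 0.0
    x = [0.5, 0.1, 0.2]
    x_adv = fgsm(w, b, x, y_true=1, epsilon=0.3)
    print(predict_proba(w, b, x))      # about 0.71: classified as class 1
    print(predict_proba(w, b, x_adv))  # about 0.29: now classified as class 0
```

No feature moves by more than 0.3, yet the predicted class flips. On images, the same idea spreads the change across thousands of pixels, so each individual pixel barely changes and the picture still looks normal to a human.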

Then there’s “white-box” model access, which consists of access to a model’s internals at different stages of machine learning model development. At a recent software development conference, we showcased how it is possible to inject malware into a model that can trigger arbitrary and potentially malicious code when the model is deployed to production.
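The article does not say which model format was used in that demonstration. One widely known route, though, is Python’s pickle format (PyTorch’s default `torch.save`/`torch.load` format, for example, is built on it), because unpickling can call arbitrary callables. The Python documentation suggests restricting which globals a loader may resolve by overriding `pickle.Unpickler.find_class`. The minimal sketch below does that; the allowlist, the `Payload` class and the helper names are hypothetical, and the payload calls a harmless `print` in place of real malicious code.

```python
import builtins
import io
import pickle

# Hypothetical allowlist of builtins the loader may resolve.
# Plain dicts, lists, numbers and strings never need a global lookup.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    """Unpickler that refuses to resolve any non-allowlisted global."""

    def find_class(self, module, name):
        # Every callable or class referenced by the pickle passes through here.
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"blocked global {module}.{name}")


def restricted_loads(data: bytes):
    """Replacement for pickle.loads that enforces the allowlist."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Payload:
    """Harmless stand-in for injected code: loading it with plain
    pickle.loads would call print(...). A real attack would reference
    something like os.system instead."""

    def __reduce__(self):
        return (print, ("arbitrary code ran during unpickling",))


if __name__ == "__main__":
    weights = {"layer1": [0.1, 0.2], "bias": 0.5}
    print(restricted_loads(pickle.dumps(weights)))  # plain data loads fine
    try:
        restricted_loads(pickle.dumps(Payload()))
    except pickle.UnpicklingError as err:
        print(err)  # blocked global builtins.print
```

An allowlist like this only narrows the attack surface; storing weights in a format that cannot carry executable code at all is generally the stronger option.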

Data leakage poses another challenge. Researchers have been able to reverse-engineer training data from a model’s learned weights, which can leak sensitive and/or personally identifiable data and potentially cause significant damage.
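The published attacks on large models are far more involved, but the underlying intuition can be shown in the simplest possible case. If an attacker can see a linear layer’s weights just before and just after a single training step on one example, the weight update itself encodes that example. The sketch below assumes squared loss, plain SGD and a single training example; all names and values are hypothetical, and the setting is a deliberate simplification rather than the researchers’ method.

```python
from __future__ import annotations


def sgd_step_linear(W: list[list[float]], b: list[float], x: list[float],
                    y: list[float], lr: float) -> tuple[list[list[float]], list[float]]:
    """One SGD step on the loss 0.5 * ||W x + b - y||^2 for a linear layer."""
    y_hat = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    err = [yh - yi for yh, yi in zip(y_hat, y)]  # dLoss/dy_hat for each output
    W_new = [[wij - lr * e * xj for wij, xj in zip(row, x)] for row, e in zip(W, err)]
    b_new = [bi - lr * e for bi, e in zip(b, err)]
    return W_new, b_new


def reconstruct_input(W_before: list[list[float]], b_before: list[float],
                      W_after: list[list[float]], b_after: list[float]) -> list[float]:
    """Recover the training input from the weight change.
    dW[i][j] = -lr * err_i * x_j and db[i] = -lr * err_i,
    so x_j = dW[i][j] / db[i] for any output row whose bias moved."""
    for row0, row1, b0, b1 in zip(W_before, W_after, b_before, b_after):
        db = b1 - b0
        if abs(db) > 1e-12:
            return [(w1 - w0) / db for w0, w1 in zip(row0, row1)]
    raise ValueError("weights did not change; nothing to recover")


if __name__ == "__main__":
    W, b = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1]], [0.0, 0.1]
    secret_x = [0.7, -1.2, 3.4]  # the "private" training example
    W2, b2 = sgd_step_linear(W, b, secret_x, [1.0, 0.0], lr=0.05)
    print(reconstruct_input(W, b, W2, b2))  # approximately [0.7, -1.2, 3.4]
```

With many examples and many steps the signal is mixed together, which is why real extraction attacks need much more work; the point is only that learned weights are a function of the training data and can retain recoverable traces of it.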

Supply chain vulnerabilities

Another security concern facing ML is one that much of the software industry is also confronting: the software supply chain. Ultimately, the issue is that an enterprise IT environment is incredibly complex and depends on many software packages to function, and a breach in just one of the packages in an organization’s supply chain can compromise an otherwise entirely secure setup.

In a non-ML context, consider the 2020 SolarWinds breach, in which vast swathes of the U.S. federal government and corporate world were breached via a supply chain vulnerability. It has prompted increased urgency to harden the software supply chain across every sector, especially given open-source software’s role in the modern world; even the White House is now hosting top-level summits on the issue.

Just as supply chain vulnerabilities can lead to a breach in any software environment, they can also be exploited to attack the ecosystem around an ML model. Here the effects can be even worse, given how heavily ML relies on open-source advances and how complex models can be, including the chain of libraries they require to run effectively.

For example, this month it was found that the long-established Ctx Python package on the PyPI open-source repository had been compromised with information-stealing code, and that the compromised versions had been downloaded upwards of 27,000 times.
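One standard defence against a tampered release is to pin the exact hash of every package or model file once it has been vetted, so a modified or swapped artifact fails a check before it is ever installed or loaded. pip supports this for Python packages through its hash-checking mode (`--require-hashes`). The minimal sketch below applies the same idea to an arbitrary artifact; the file name and helper names are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_sha256: str) -> None:
    """Raise if the file does not match the hash recorded when it was vetted."""
    actual = sha256_of(path)
    if actual != expected_sha256.lower():
        raise RuntimeError(f"{path}: expected sha256 {expected_sha256}, got {actual}")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        artifact = Path(tmp) / "model.bin"  # hypothetical artifact name
        artifact.write_bytes(b"model weights")
        pinned = hashlib.sha256(b"model weights").hexdigest()  # recorded at vetting time
        verify_artifact(artifact, pinned)  # passes silently
        artifact.write_bytes(b"model weights + injected code")  # simulate tampering
        try:
            verify_artifact(artifact, pinned)
        except RuntimeError as err:
            print("rejected:", err)
```

Pinning does not detect a package that was already malicious when it was vetted, but it does stop a later, silently modified release from slipping in.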

With Python being one of the most popular languages for ML, supply chain compromises such as the Ctx breach are particularly pressing for ML models and their users. Any maintainer, contributor or user of software libraries will at some point have run into the challenges posed by the second-, third-, fourth- or higher-level dependencies that libraries bring with them; for ML, these challenges can become significantly more complex.
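To see why indirect dependencies matter, it helps to walk the full dependency graph rather than only the packages a project declares. The sketch below does a breadth-first traversal and reports each dependency with its depth (1 for direct, 2 for second-level, and so on). The package names and graph are made up; in practice the graph would be built from package metadata or a lock file.

```python
from __future__ import annotations

from collections import deque

# Hypothetical dependency graph: package -> packages it directly requires.
DEPS = {
    "my-ml-service": ["ml-framework", "web-server"],
    "ml-framework": ["numeric-core", "serialization-lib"],
    "web-server": ["http-parser", "serialization-lib"],
    "serialization-lib": ["compression-lib"],
}


def transitive_dependencies(graph: dict[str, list[str]], root: str) -> dict[str, int]:
    """Breadth-first walk returning every dependency of root with the
    shallowest depth at which it appears (1 = direct dependency)."""
    depth: dict[str, int] = {}
    seen = {root}  # also guards against dependency cycles
    queue = deque([(root, 0)])
    while queue:
        pkg, d = queue.popleft()
        for dep in graph.get(pkg, []):
            if dep not in seen:
                seen.add(dep)
                depth[dep] = d + 1
                queue.append((dep, d + 1))
    return depth


if __name__ == "__main__":
    for name, level in transitive_dependencies(DEPS, "my-ml-service").items():
        print(f"level {level}: {name}")
```

In this toy graph the service declares only two dependencies but actually runs code from six packages, including a third-level `compression-lib` it never references directly. A compromise there reaches the service just as surely as one in a direct dependency.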

Where does MLSecOps come in?

What both of the examples above share is that, although they are technical problems, addressing them does not require new technology. Instead, these risks can be mitigated through existing processes and employees, provided both are held to high standards. I consider this to be the motivating principle behind MLSecOps: the centrality of strong processes to harden ML for production environments.

While we’ve covered only two high-level areas specific to ML models and code, there is also a vast array of challenges around ML system infrastructure. Best practices in authentication and authorization can be used to protect models and their endpoints and to ensure they’re used only on a need-to-use basis. For instance, access to models can be governed by multi-level permission systems, which reduce the risk of a malicious party gaining both black-box and white-box access. The role of MLSecOps here is to develop strong practices that harden model access while minimally inhibiting the work of data scientists and devops teams, so that teams can continue to operate efficiently and effectively.
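A multi-level permission system can be as simple as giving black-box and white-box access separate permissions and granting them to different roles. The minimal sketch below uses Python’s `enum.Flag`; the permission names, roles and `authorize` helper are hypothetical, and a real deployment would load its policy from an identity and access management service rather than hard-coding it.

```python
from enum import Flag, auto


class ModelPermission(Flag):
    PREDICT = auto()       # black-box: call the inference endpoint
    READ_WEIGHTS = auto()  # white-box: download or inspect model internals
    DEPLOY = auto()        # push a new model version to production


# Hypothetical role policy. No single role holds both READ_WEIGHTS and DEPLOY,
# so one stolen credential cannot both inspect a model and ship a modified one.
ROLES = {
    "application": ModelPermission.PREDICT,
    "data_scientist": ModelPermission.PREDICT | ModelPermission.READ_WEIGHTS,
    "release_manager": ModelPermission.DEPLOY,
}


def authorize(role: str, needed: ModelPermission) -> bool:
    """True only if the role holds every permission in `needed`.
    Unknown roles get no permissions at all (deny by default)."""
    granted = ROLES.get(role, ModelPermission(0))
    return (granted & needed) == needed


if __name__ == "__main__":
    print(authorize("application", ModelPermission.PREDICT))       # True
    print(authorize("application", ModelPermission.READ_WEIGHTS))  # False
```

Applications that only consume predictions never see the weights, while data scientists keep the access they need for their day-to-day work.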

The same goes for the software supply chain: good MLSecOps asks teams to build in a process of regularly checking their dependencies, updating them as appropriate and acting quickly the moment a vulnerability is raised as a possibility. The MLSecOps challenge is to develop these processes and build them into the day-to-day workflows of the rest of the IT team, with the aim of largely automating them to reduce the time spent manually reviewing the software supply chain.
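The dependency check described above lends itself to automation, for example as a step that runs on every build. The sketch below lists installed Python distributions with the standard-library `importlib.metadata` and compares them against an advisory list. The advisory entries and helper names are hypothetical; dedicated tools such as `pip-audit` perform this comparison against public vulnerability data and handle details (such as package-name normalization and version ranges) that this sketch skips.

```python
from __future__ import annotations

from importlib import metadata

# Hypothetical advisory feed: package name -> versions known to be bad.
# A real pipeline would fetch this from a vulnerability database.
ADVISORIES: dict[str, set[str]] = {
    "example-compromised-pkg": {"1.0.3"},
}


def installed_packages() -> dict[str, str]:
    """Map lower-cased distribution names to installed versions."""
    found: dict[str, str] = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with broken metadata
            found[name.lower()] = dist.version
    return found


def audit(installed: dict[str, str], advisories: dict[str, set[str]]) -> list[str]:
    """Return one finding per installed package that matches an advisory."""
    return [
        f"{name}=={version} matches a known advisory"
        for name, version in sorted(installed.items())
        if version in advisories.get(name, set())
    ]


if __name__ == "__main__":
    findings = audit(installed_packages(), ADVISORIES)
    for finding in findings:
        print(finding)
    # In CI, a non-zero exit code would fail the build on any finding.
    raise SystemExit(1 if findings else 0)
```

Failing the build automatically is what turns the check from a periodic manual review into part of the team’s everyday workflow.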

Beyond these examples lie many more challenges in the infrastructure behind ML systems. But what the examples have hopefully shown is this: while no ML model and its associated environment can be made unhackable, most security breaches happen only because best practice was lacking at some stage of the development lifecycle.

The role of MLSecOps is to build security intentionally into the infrastructure that oversees the end-to-end machine learning lifecycle, including the ability to identify what the vulnerabilities are, how they can be remedied and how those remedies can fit into team members’ day-to-day work.

MLSecOps is an emerging field, with people working in and around it continuing to explore and define the security vulnerabilities and best practices at each stage of the machine learning lifecycle. If you’re an ML practitioner, now’s an excellent time to contribute to the ongoing discussion as the field of MLSecOps continues to develop.

Reposted from source: leackstat.com

If you enjoyed this article, please like our Facebook page: Big Data In Finance
