Summary: When a team starts a machine learning project, it helps to set up a single real-number evaluation metric for the problem. Let us look at one example.

Whether you are tuning hyperparameters, trying out different ideas for learning algorithms, or experimenting with different options for building your machine learning system, you will find that progress is much faster if you have a single-number evaluation metric that quickly tells you whether the new thing you just tried works better or worse than your last idea. So when a team starts a machine learning project, it helps to set up a single real-number evaluation metric for the problem. Let us look at one example.

▲ Image source: medium.com

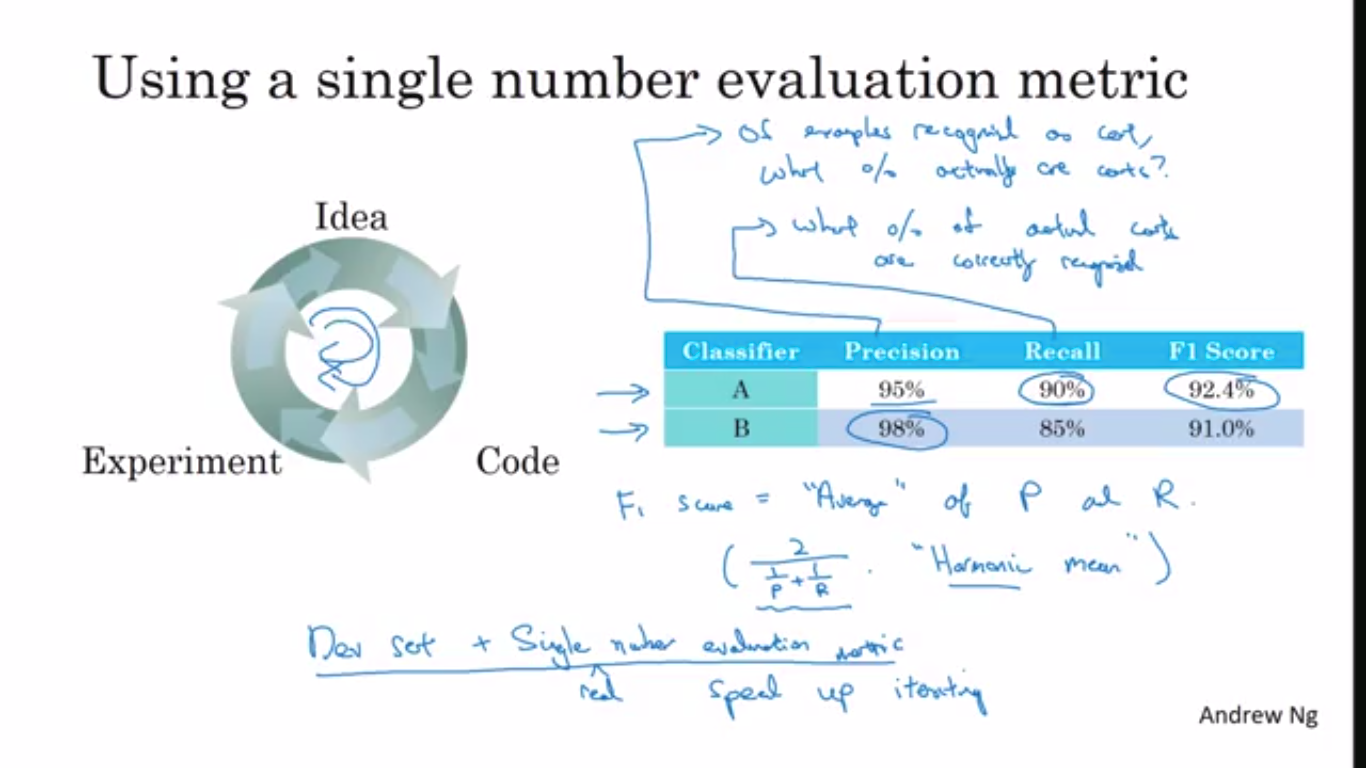

You often have an idea, code it up, run the experiment to see how it did, and then use the outcome of the experiment to refine your ideas. You keep going around that loop as you continue to improve your algorithm. Suppose you have previously built a classifier, A. By changing the training set, the hyperparameters, or something else, you have now trained a new classifier, B. One reasonable way to evaluate a classifier's performance is to look at its precision and recall.

If classifier A has 95% precision, this means that when classifier A says something is a cat, there is a 95% chance it really is a cat. If classifier A has 90% recall, this means that of all the images in, say, your dev set that really are cats, classifier A correctly identified 90% of them. The problem with using precision and recall together as your evaluation metric is that if classifier A does better on recall, which it does here, and classifier B does better on precision, then you are not sure which classifier is better.
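To make the two definitions concrete, here is a minimal sketch that computes precision and recall from raw counts. The function name `precision_recall` and the counts are hypothetical, chosen only so that the result matches the 95% precision and 90% recall quoted for classifier A.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) computed from raw counts on a dev set."""
    predicted_positive = true_positives + false_positives
    actual_positive = true_positives + false_negatives
    # Precision: of everything the classifier called a cat, how much really is a cat.
    precision = true_positives / predicted_positive if predicted_positive else 0.0
    # Recall: of all the real cats, how many the classifier found.
    recall = true_positives / actual_positive if actual_positive else 0.0
    return precision, recall


# Hypothetical counts (not from the article): 171 cats correctly flagged,
# 9 non-cats wrongly flagged as cats, 19 cats missed.
precision, recall = precision_recall(
    true_positives=171, false_positives=9, false_negatives=19
)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

With these counts, 171 of the 180 images flagged as cats are real cats, and 171 of the 190 real cats are found.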

With two evaluation metrics, it is difficult to pick quickly between two classifiers, let alone among ten. So rather than using two numbers, precision and recall, to pick a classifier, I recommend finding a new evaluation metric that combines precision and recall.

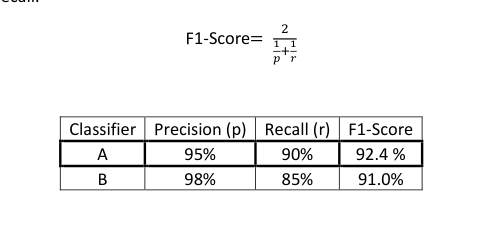

In the machine learning literature, the standard way to combine precision and recall is the F1 score, which is the harmonic mean of precision and recall. Here you can see immediately that classifier A has the better F1 score. So, assuming the F1 score is a reasonable way to combine precision and recall, you can quickly pick classifier A over classifier B.
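As a rough sketch of that comparison, the snippet below computes the F1 score as the harmonic mean of precision and recall, 2PR / (P + R). Classifier A's numbers come from the text; classifier B's numbers (98% precision, 85% recall) are an assumption made for illustration, since the article only shows them in the image.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


classifiers = {
    "A": (0.95, 0.90),  # precision and recall quoted in the text
    "B": (0.98, 0.85),  # hypothetical values, assumed for illustration
}

# One number per classifier, so choosing between them is a simple max().
scores = {name: f1_score(p, r) for name, (p, r) in classifiers.items()}
best = max(scores, key=scores.get)

for name, score in scores.items():
    print(f"classifier {name}: F1 = {score:.4f}")
print("best by F1:", best)
```

Under these assumed numbers, A comes out ahead, which agrees with the article's conclusion.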

▲ Image source: medium.com

The F1 score is not the only single-number evaluation metric you can use; a simple average of precision and recall, for example, could also indicate which classifier to choose.
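One way to keep the choice of metric open is to pass it in as a function. The sketch below is illustrative only: `pick_best` and `mean_metric` are hypothetical names, and classifier B's numbers are assumed rather than taken from the article.

```python
def mean_metric(precision, recall):
    """Simple arithmetic average of precision and recall."""
    return (precision + recall) / 2


def pick_best(candidates, metric):
    """Return the name of the candidate with the highest metric value.

    candidates maps a classifier name to a (precision, recall) pair.
    """
    return max(candidates, key=lambda name: metric(*candidates[name]))


candidates = {
    "A": (0.95, 0.90),  # precision and recall quoted in the text
    "B": (0.98, 0.85),  # hypothetical values, assumed for illustration
}

best_by_mean = pick_best(candidates, mean_metric)
print("best by simple average:", best_by_mean)
```

Because the metric is a plain function, you can swap in another single-number metric without changing the selection code.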

A single-number evaluation metric lets you tell quickly whether classifier A or classifier B is better. Having a dev set plus a single-number evaluation metric therefore tends to speed up the iterative process of improving your machine learning algorithm.

Reposted from: medium.com
